The Big Green Tackling Machine

About my submission to the 2024 NFL Big Data Bowl

The NFL’s Next Gen Stats are a game changer. Since 2017, the NFL has used RFID chips in players’ shoulder pads to gather a torrent of data. The chips track every NFL player’s location, speed, and acceleration every tenth of a second on every play in every game. For reference, a single game went from roughly 160 rows of data (one per play) to over 600,000.

These metrics bring big data to sports analytics in a big way. What’s more? The NFL organizes a big competition every year called the Big Data Bowl, where they release this tracking data to the analytics community to unearth novel insights about the game. It’s a big deal.

This year, the 2024 NFL Big Data Bowl was about tackling, using data from Weeks 1-9 of the 2022 NFL season. In this post, I’ll share a high-level overview of my project to predict the tackle location on run plays and some lessons learned.

Submission Report - Detailed technical report and the official submission

GitHub Repository - Code and data

Project Overview

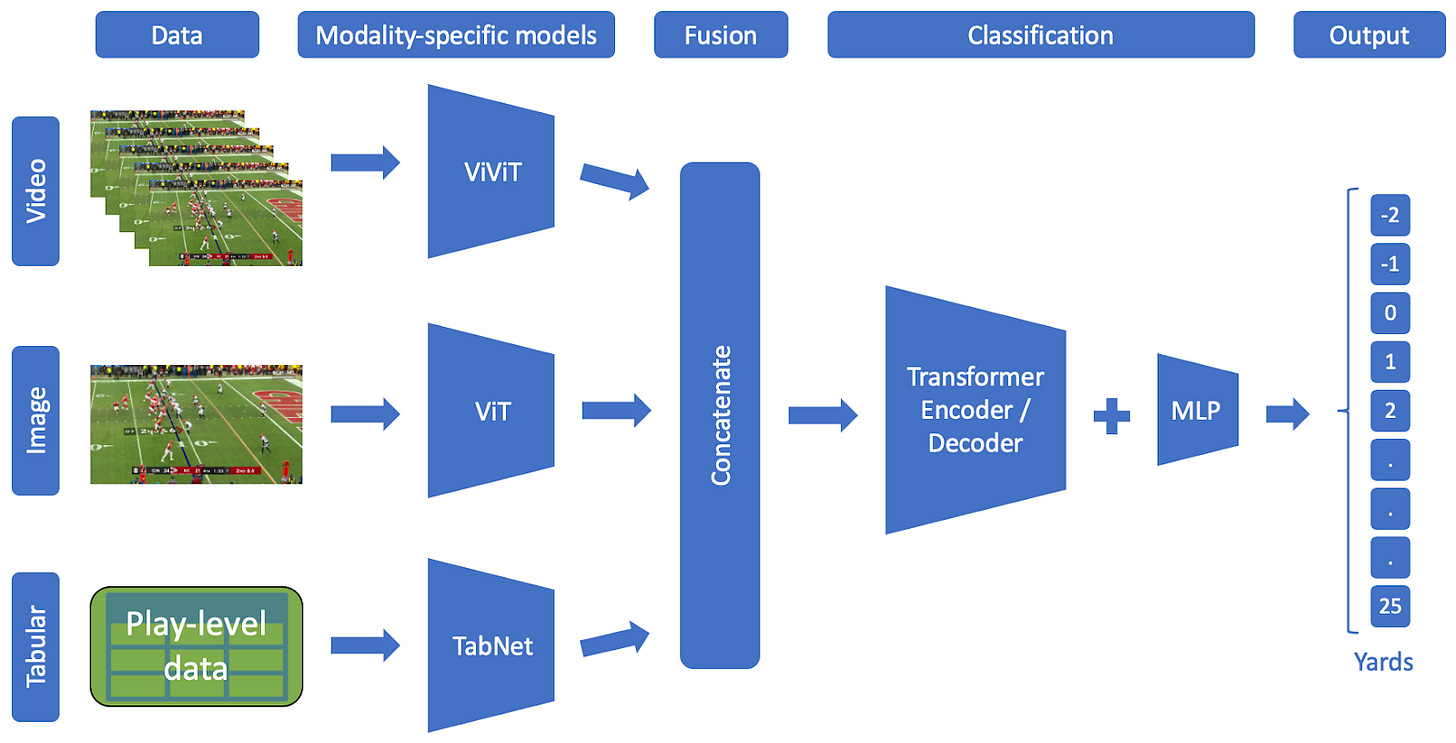

The goal of the project was to forecast the tackle location (yards past the line of scrimmage) on NFL run plays. With the expected tackle location on a play, coaches could make better play-calling decisions on the field, and teams could further analyze player and team performance. To predict the tackle location, we took a multi-modal approach, integrating three data streams: a video of the field up until the handoff, an image capturing the field at the moment of the handoff, and a suite of play-specific variables (e.g., quarter, defenders in the box, and ball carrier).

Field Representation

We represented each play in the tracking dataset as a video, with each frame corresponding to a timestamp in the tracking data. Each frame represents the 120x53.3-yard football field in an image that is 120x54 pixels, with each pixel representing roughly a square yard on the field. Just as a standard "RGB" image has 3 channels per pixel (red, green, and blue), our image had 10 channels per pixel to represent various features in the tracking data.

Some of the channels include player and ball locations, the ratio of players on offense/defense in each pixel location, and the net velocity and force vectors at each pixel.
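To make the representation concrete, here is a minimal sketch of how one tracking timestamp could be rasterized into a multi-channel grid. It is not the project's actual code: the three-channel layout, the `rasterize_frame` helper, and the input schema (`x`, `y`, `side`) are all assumptions for illustration, and the real images used 10 channels.

```python
FIELD_LENGTH_PX = 120  # yards along the field, including end zones
FIELD_WIDTH_PX = 54    # 53.3 yards rounded up to whole pixels

# Hypothetical channel layout; the real model used 10 channels.
OFFENSE, DEFENSE, BALL = 0, 1, 2
NUM_CHANNELS = 3


def to_pixel(x, y):
    """Map field coordinates in yards to a (row, col) pixel, clamped to the grid."""
    col = min(max(int(x), 0), FIELD_LENGTH_PX - 1)
    row = min(max(int(y), 0), FIELD_WIDTH_PX - 1)
    return row, col


def rasterize_frame(entities):
    """Build a channels x height x width grid from one tracking timestamp.

    `entities` is a list of dicts with keys "x", "y", and "side"
    ("offense", "defense", or "ball"). This schema is an assumption.
    """
    frame = [[[0.0] * FIELD_LENGTH_PX for _ in range(FIELD_WIDTH_PX)]
             for _ in range(NUM_CHANNELS)]
    channel_of = {"offense": OFFENSE, "defense": DEFENSE, "ball": BALL}
    for entity in entities:
        row, col = to_pixel(entity["x"], entity["y"])
        frame[channel_of[entity["side"]]][row][col] += 1.0  # count per pixel
    return frame


frame = rasterize_frame([
    {"x": 35.2, "y": 26.7, "side": "offense"},
    {"x": 35.9, "y": 26.1, "side": "offense"},
    {"x": 38.4, "y": 24.0, "side": "defense"},
    {"x": 35.5, "y": 26.6, "side": "ball"},
])
print(len(frame), len(frame[0]), len(frame[0][0]))  # 3 54 120
print(frame[OFFENSE][26][35])  # 2.0 (two offensive players share this pixel)
```

Stacking one such grid per timestamp gives the video; the grid at the handoff timestamp is the single image.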

Modeling Approach

We built a multi-modal transformer to combine the data from each modality: a video of the field up to the handoff, an image of the field at the moment of the handoff, and supplemental tabular play-level data. We passed the video data into a video vision transformer (ViViT), proposed by Arnab et al.; the image data into a vision transformer (ViT), proposed by Dosovitskiy et al.; and the tabular data into a TabNet model, proposed by Arik and Pfister.
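This post doesn't spell out how the three encoders' outputs were merged, so the following is only one common option, not necessarily what the project did: concatenate the per-modality embeddings and pass them through a linear layer with a softmax over tackle-location bins. The embedding sizes, `NUM_BINS`, and the random weights are placeholders.

```python
import math
import random


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [v / total for v in exps]


def fuse_and_predict(video_emb, image_emb, tabular_emb, weights, bias):
    """Concatenate per-modality embeddings and apply one linear layer.

    Returns one probability per tackle-location bin.
    """
    fused = video_emb + image_emb + tabular_emb  # list concatenation
    logits = [
        sum(w * f for w, f in zip(row, fused)) + b
        for row, b in zip(weights, bias)
    ]
    return softmax(logits)


# Stand-in embeddings; in practice these would come from the three encoders.
rng = random.Random(0)
video_emb = [rng.uniform(-1, 1) for _ in range(4)]
image_emb = [rng.uniform(-1, 1) for _ in range(4)]
tabular_emb = [rng.uniform(-1, 1) for _ in range(2)]

NUM_BINS = 5  # hypothetical number of yard bins, kept small for the demo
fused_dim = len(video_emb) + len(image_emb) + len(tabular_emb)
weights = [[rng.uniform(-1, 1) for _ in range(fused_dim)]
           for _ in range(NUM_BINS)]
bias = [0.0] * NUM_BINS

probs = fuse_and_predict(video_emb, image_emb, tabular_emb, weights, bias)
print(len(probs), round(sum(probs), 6))
```

In a real model the weights would be learned jointly with the encoders rather than drawn at random.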

Results

The multi-modal model outperformed each of the single-modality models, achieving a continuous ranked probability score (CRPS) of 0.0127 (closer to 0 is better).
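For readers new to the metric, here is a minimal sketch of a discrete continuous ranked probability score for a single play: the mean squared gap between the predicted cumulative distribution and a step function at the true tackle location. The exact binning behind the 0.0127 figure isn't given here, so the 16 yard bins below are an assumption.

```python
def crps(pred_cdf, actual_yards, yard_bins):
    """Mean squared gap between a predicted CDF and the step at the true value."""
    total = 0.0
    for prob, yard in zip(pred_cdf, yard_bins):
        step = 1.0 if yard >= actual_yards else 0.0
        total += (prob - step) ** 2
    return total / len(yard_bins)


yard_bins = list(range(-5, 11))  # hypothetical bins: -5 to 10 yards

# A forecast that puts all probability on the true 3-yard outcome.
perfect = [1.0 if y >= 3 else 0.0 for y in yard_bins]
print(crps(perfect, 3, yard_bins))  # 0.0

# A forecast that puts all probability on 5 yards when the truth is 3.
off_by_two = [1.0 if y >= 5 else 0.0 for y in yard_bins]
print(crps(off_by_two, 3, yard_bins))  # 0.125
```

Averaging this per-play score over all plays gives a dataset-level number; a confident miss is penalized in proportion to how many bins it is off by.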

Here’s the model’s output on a sample 3-yard run play:

Project applications

I spoke with the Dartmouth Football team's Head Coach Sammy McCorkle, Senior Director of Football Operations Dino Cauteruccio Jr., and Director of Game Management & Analytics Aneesh Sharma ’26 to hear their thoughts on the project. Based on our conversation, I could imagine this model being useful for NFL teams in three ways:

Game Planning and Strategy Development: By understanding where tackles are likely to occur, teams can modify their game plans to exploit weaknesses in opponents' offensive or defensive setups.

Player Performance Analysis: Teams could use the model to evaluate the performance of individual players or the team by comparing the expected vs actual tackle location for plays.

In-Game Decision Making: Coaches can use this data during games to make informed play-calling decisions. They can also use the expected tackle location to guide lineup changes when defensive or offensive players are having a particularly good or bad day.
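The expected-vs-actual comparison in the player performance idea can be sketched in a few lines, assuming the model outputs a probability for each yard bin. The `yards_over_expected` helper, the play schema, and the player labels are hypothetical.

```python
from collections import defaultdict


def expected_yards(probs, yard_bins):
    """Probability-weighted average tackle location."""
    return sum(p * y for p, y in zip(probs, yard_bins))


def yards_over_expected(plays, yard_bins):
    """Average (actual - expected) tackle location per ball carrier."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for play in plays:
        expected = expected_yards(play["probs"], yard_bins)
        totals[play["ball_carrier"]] += play["actual_yards"] - expected
        counts[play["ball_carrier"]] += 1
    return {name: totals[name] / counts[name] for name in totals}


yard_bins = [2, 3, 4]  # toy bins, in yards past the line of scrimmage
plays = [
    {"ball_carrier": "RB_A", "probs": [0.25, 0.5, 0.25], "actual_yards": 5},
    {"ball_carrier": "RB_A", "probs": [0.5, 0.5, 0.0], "actual_yards": 2},
    {"ball_carrier": "RB_B", "probs": [0.0, 0.5, 0.5], "actual_yards": 3},
]
print(yards_over_expected(plays, yard_bins))  # {'RB_A': 0.75, 'RB_B': -0.5}
```

A positive average means the ball carrier tends to get farther than the model expected; the same residual, grouped by defender or defensive unit, would read the other way around.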

A few reflections

I learned a ton from this project.

Deep learning methods: In a week, I scaled from learning about the basics of neural networks (re: this post) to understanding transformers and state-of-the-art multi-modal methods. One of the cool things is that the same kind of multi-modal transformer architecture powers models like ChatGPT. I smiled when I saw this graphic from the Gemini report. Look familiar?

Problem representation is hard (and important): The most difficult part of this project was figuring out how to represent the field. Building the models wasn’t easy, but there was a ton of help thanks to open-source code. There was no precedent for representing the field, so I had to come up with a representation from scratch. The first version had 22 channels in each image!

There’s an art to building models: It took a lot of trial and error to tweak the model architecture (e.g., the number of hidden layers or transformer heads) to find a configuration that performed well. Good ML engineers have heuristics for which knobs to tweak. I wonder whether deep learning models will become commoditized like out-of-the-box scikit-learn models.

Domain expertise is key: My director at Altice preached how important it was to understand the business factors behind the research question/data/models we were wrangling with. I applied those teachings to this project, meeting with the Dartmouth football coach to get his perspective on the research question. Coach McCorkle’s advice was invaluable, inspiring important features to include.

GPUs are awesome: It took 12 hours to train the model on my laptop; an NVIDIA A100 finished the same job in 11 minutes, roughly 65 times faster. It was one thing to read about NVIDIA’s stock ripping last year; it was another to feel the power of an A100 first-hand. The hype is real.

Some thank-yous

I’d like to thank Dartmouth '24s Asher Vogel and Andrew Koulogeorge for collaborating on an earlier version of the project for our computer science class. I would also like to thank Professor Soroush Vosoughi for his help in designing the multi-modal model architecture. A big thank you as well to Elijah Gagne and Anthony Helm from Dartmouth Research Computing for generously granting us access to NVIDIA A100 GPUs to train the models. Also, a shoutout to Michael Lopez for coming to Dartmouth to give the math department's Prosser Lecture about Next Gen Stats. His talk inspired us to take on this project. Lastly, I would like to thank Dartmouth Football Coach Sammy McCorkle, Dino Cauteruccio Jr., and Aneesh Sharma '26 for meeting with us to share their expertise on the research topic. Congrats to the Dartmouth football team for winning the Ivy League Championship, and go Big Green!